GIANT AI Guide for Educators: How to Critique AI-Generated Images

When working with AI tools, it is important to recognize their societal impact and to analyze their outputs closely for possible biases, inaccurate results, and harmful or inappropriate content. In this section, we outline several classroom activities educators can use to highlight these biases, along with prompt engineering techniques for improving AI-generated images.

Engineer Prompts to Generate Less Biased Outcomes

Here is a simple activity you can do with your students to raise awareness of possible AI biases, their causes, and strategies for generating less biased outcomes with AI tools.

Try this activity with your students. Ask your students to take a piece of paper and draw a picture of a smart scientist! They can also describe a smart scientist in words. Encourage them to include as much detail as they can in their drawings or descriptions. What does the scientist look like? What do they wear? How old are they? Where do they come from? Where do they live? What are their hobbies? What do they do at work? Where do they work? What tools do they use?

Next, ask students to share their vision of a scientist and create simple charts on the board to summarize the traits and characteristics they describe. Analyze the findings together. As a class, are we biased toward a particular gender, race, or age as the model for a “smart scientist”? Are we biased toward a particular body type: tall, short, thin, muscular, overweight? How about a particular scientific field? Do we assume all “smart scientists” work in a chemistry lab?

After you examine your classroom’s biases about who a “smart scientist” could be and what they could look like, ask your students to revise their drawings or descriptions to make them less biased.

We all have conscious and unconscious biases. These biases can stem from a lack of representation in our environment. If all the scientists we’ve been introduced to are white men wearing lab coats and working in chemistry labs, chances are we automatically picture a “smart scientist” as a white man wearing a lab coat and working in a chemistry lab. However, if our students have been exposed to a diverse group of scientists with different genders, races, body shapes, and professional backgrounds, our classroom is more likely to hold a diverse view of who a smart scientist could be.

Lack of representation in a “database” is also one reason AI models can be biased. AI models learn from the data they are trained on. If the datasets used to train AI models are biased, the AI-generated outputs will also be biased.

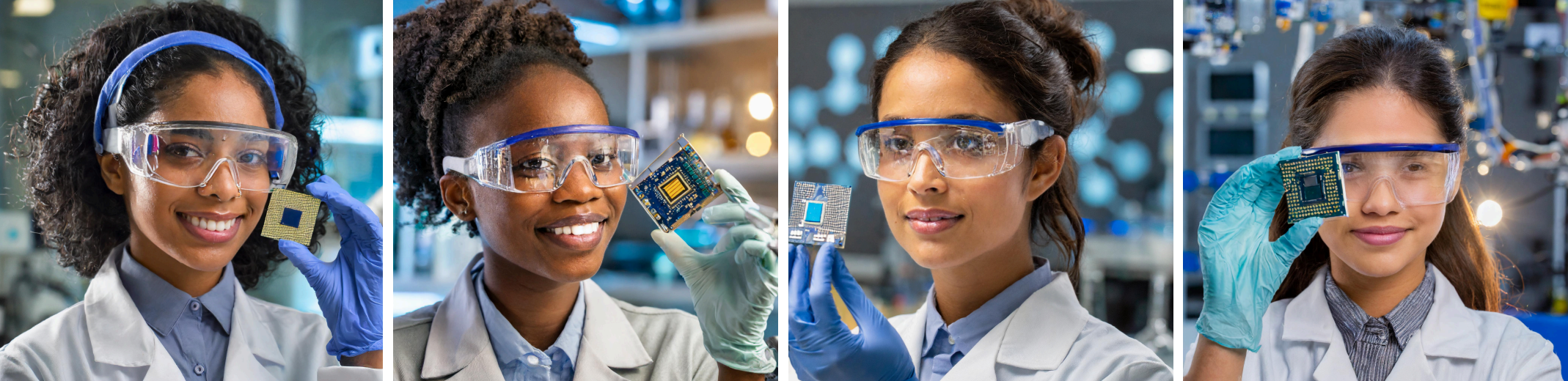

Go to Adobe Firefly and prompt the AI to generate an image of a “smart scientist.” Examine the outputs and discuss whether you find any biases. If you don’t have access to the software, you can examine the four images of a “smart scientist” generated by Adobe Firefly shown here. Can you spot any biases?

When critiquing AI outputs for possible biases, it’s important to discuss why those outputs may be biased. Lack of representation or misinformation in the training data is one possible reason. To overcome this shortcoming, we can either update the datasets (one reason it’s extremely important to have a diverse group of people working on AI models) or, as users, “engineer our prompts.” For example, we can change our prompt from “a smart scientist” to “a female physicist.” Here are four images generated by Adobe Firefly from that prompt. Do you spot a bias? Why are they all wearing glasses?

How might we engineer our prompt further? Perhaps we can prompt the AI with this statement: “A female physicist wearing lab goggles, holding a semiconductor.”

Ask your students to generate images of a scientist using this prompt structure:

A [female] [physicist] wearing [lab goggles], holding a [semiconductor]

A [gender] [science field] wearing [outfit], holding a [science tool or discovery]
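For teachers who want to prepare a set of prompts ahead of class, the template above can be filled in mechanically. The Python sketch below is a hypothetical helper, not part of Adobe Firefly or any Firefly API: it only builds prompt strings from lists of slot values you choose, and each prompt would still be pasted into Firefly by hand. The function name `build_prompts` and the example slot values are illustrative assumptions.

```python
from itertools import product

# The bracketed slots of the classroom template become named fields.
# Note: the fixed article "a" before {tool} assumes tool names start with a consonant sound.
TEMPLATE = "A {gender} {field} wearing {outfit}, holding a {tool}"


def build_prompts(genders, fields, outfits, tools):
    """Return one filled-in prompt for every combination of slot values."""
    return [
        TEMPLATE.format(gender=g, field=f, outfit=o, tool=t)
        for g, f, o, t in product(genders, fields, outfits, tools)
    ]


prompts = build_prompts(
    genders=["female", "male"],
    fields=["physicist", "engineer"],
    outfits=["lab goggles"],
    tools=["semiconductor"],
)

for prompt in prompts:
    print(prompt)
```

Changing only one slot at a time (for example, only the gender or only the field) makes it easier for students to compare the resulting images and see which word in the prompt changed the output.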

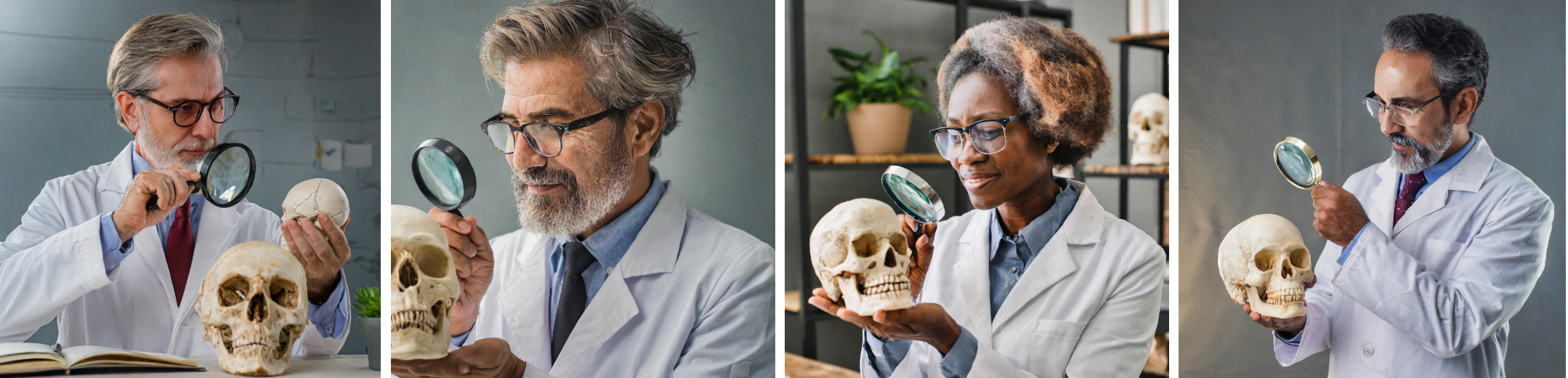

Reverse Engineer: Can you guess which prompts we might have used to generate these images?

Prompt: A female computer scientist wearing a t-shirt, holding a laptop

Prompt: An old anthropologist wearing a lab coat, holding a magnifying glass and inspecting a skull

Prompt: A hispanic marine biologist wearing wetsuit, diving mask, fins, and scuba gloves

As these examples illustrate, we as users can engineer our prompts to generate less biased images. To do so, we need to be aware of our own biases.

You can also run this activity with other prompts. For example, Joseph F. Lamb, a computer science teacher from P.S. 206, asked his students to describe a “smart student” and then use AI to generate images of one. You might ask your students to describe a “criminal,” a “leader,” or a “successful person.”

In addition to critiquing AI outputs for possible biases, it’s critical to have the “eyes” to spot inaccurate, false, or incomplete information generated by these tools. Sometimes AI mistakes are easy to spot; other times, spotting them requires expertise. For example, take a closer look at our generated images of marine biologists above. If you have scuba diving experience, like Yokasta Evans Lora, one of the teachers on our team, you would notice that “these images are incomplete. The vests (buoyancy compensator) are missing the regulator (on the right) and the inflator hose (on the left). Also, there is no air source attached on the back (i.e., the tank). Most scuba divers would also have a snorkel attached to the mask to use it during long surface swimming in order to conserve air,” explained Ms. Evans Lora. In the next section of this guide, we explore ways to help students detect and critique misinformation when working with AI tools.

Critique AI-Generated Images for Hallucinations and Misinformation

Because of the way generative AI models, such as large language models (LLMs) and image generators, are trained, even their developers cannot fully explain what the models have learned. As a result, these models sometimes generate inaccurate information or “make up” answers when they lack the relevant knowledge. These inaccuracies may come from misinformation or missing data in the training dataset, or they may be algorithmic, related to the way the models were trained and fine-tuned.

These “hallucinations” can appear in both AI-generated images and text. Learning to critique AI inaccuracies is an important part of media literacy for educators and students alike.

The following activity helps demystify the technology by having students critique AI-generated images.

Try this activity with your students. Show your students these images and ask them to spot AI hallucinations. What went wrong, and what could the reason be? Reflect on your understanding of how AI works as you discuss the “why” behind these inaccuracies.

Prompt: A cute squishy blue octopus, 3D Style

Prompt: A Hawk flying with a blue sky in the background

Prompt: A peregrine falcon flying with a blue sky in the background

Prompt: A cute cartoon baby pointing to the sky

Prompt: A six year old cartoon girl with curly hair, doing parkour and splits, wearing butterfly wings

Prompt: A pretty unicorn, kawaii style

Prompt: A penguin that likes to eat marshmallows and read

Prompt: Big head kitten with tiny hands, kawaii

Prompt: Kid with a mask

AI inaccuracies (aka hallucinations) are easier to spot in some of these outputs than in others. Either way, examining these images not only demystifies the technology for children but also encourages them to become more critical users of it. In addition, by discussing the “why” behind these hallucinations, students can better understand how these technologies work.